Affective computing is a rapidly growing, interdisciplinary field focused on the study and development of products and systems that recognize, interpret, process, and simulate human affects.¹ In other words, it is technology aimed at identifying or gauging emotions, feelings, or moods. It typically uses artificial intelligence (AI) to analyze physical signals, such as facial expression, tone of voice, and body language, as well as biological signals, such as heart rate. The goal of many affective computing endeavors is to improve human-computer interaction, in part by enabling computers to better understand human emotions so that they can respond appropriately.

¹ Tao, Jianhua; Tieniu Tan (2005). "Affective Computing: A Review". Affective Computing and Intelligent Interaction. LNCS 3784. Springer. pp. 981–995. doi:10.1007/11573548.

What's New

Affective computing is a nascent and relatively unproven research area, yet affective technologies are increasingly appearing in mainstream consumer and enterprise software and hardware products. Beyond the consumer sector, companies are deploying these technologies to analyze online customer reviews, monitor employee productivity, assign job applicants an ‘employability score,’ or even evaluate whether a child is paying attention in school. According to the 2020 Global Forecast to 2025 report, the affective computing market is projected to grow from US$28.6 billion in 2020 to US$140 billion by 2025.

Affective technologies are often marketed as “emotion detection” or “emotion analysis,” labels that overstate their capabilities. Indeed, many of these products and services claim to provide accurate readings of an individual’s true emotional state. However, research has shown that affective technologies can only infer what kind of emotion or mood an individual is expressing based on the signals available. In other words, the technology doesn’t know what you are feeling; it is guessing what you are feeling based on what you show to the outside world.

Despite limitations often cited by researchers and developers alike, this technology is increasingly making its way into mainstream commercial devices and systems, from hiring systems to wristwatches to healthcare robots that care for the elderly. This burgeoning market presents an immense opportunity to leverage emotion-related data for business and government use, but it also comes with significant risks.

Signals of Change

In August 2020, Amazon introduced Halo, a health and fitness wristband that can also track users’ emotions. The wearable tracks body fat percentage, sleep temperature, and emotional state. The optional ‘Tone’ feature listens to a user’s voice and analyzes the information gathered throughout the day, presenting a summary of how the user felt and showing times when the user felt elated, hesitant, energetic, etc.

Over the next two years, multiple technology companies plan to roll out emotion-detecting systems for cars in partnership with automakers. New car sensors are being marketed as a safety feature, but the technology can also be used to monitor and harvest emotional data from drivers. Technology companies are laying out business strategies to record every movement, glance, or emotion from a driver and sell the resulting data.

Academics in Italy have published a paper introducing a methodology for recognizing crowd emotions from crowd speech and sound at mass events. Detecting the emotional content of crowd sounds can help identify mass panic or riots and raise an alert in an emergency. It can also help automate video annotation describing spectators’ emotions at sporting events, concerts, and other gatherings.

Fast Forward to 2025

Working in retail has changed a lot in recent years as we started tracking customer-employee interactions...

The Fast Forward

BSR Sustainable Futures Lab

Business Implications

As affective technologies become ever more available, their use is expected to grow exponentially, triggering a need for regulation. Differing regulatory environments present challenges to technology adoption: the E.U. leans toward a more stringent approach, while the U.S. and Asia have historically been more permissive of these applications.

The use of affective technologies, both through new and existing products and services, will significantly expand the types of data collected, as well as the associated opportunities to use data to develop or market business products and services or inform government programs and interventions.

Early adoption areas for affective computing include advertising and marketing, where the perceived emotions of an individual may trigger online advertisements for certain products or services or might direct a sales associate to pitch a particular item.

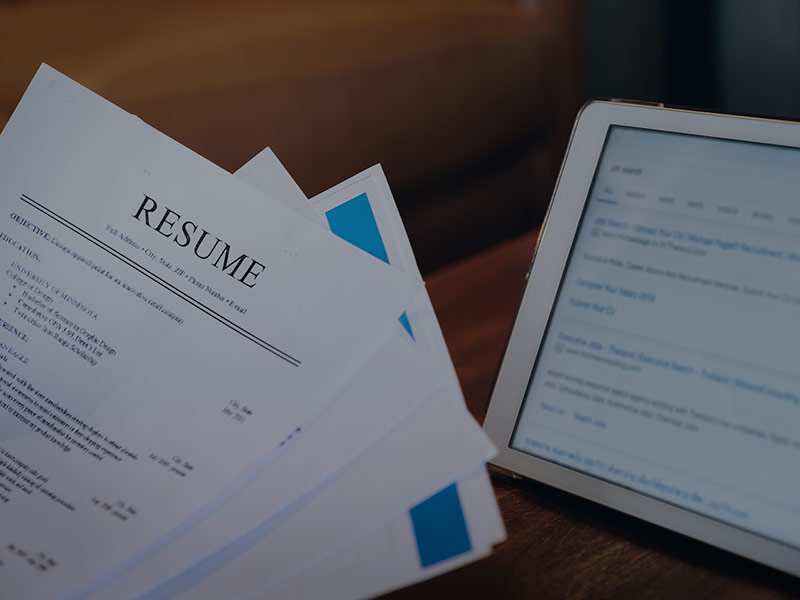

These technologies may also be used to track customer-employee interactions in retail settings for training purposes or to identify potentially violent behavior in a crowd. Use cases are already prevalent throughout the workplace, where affective technology can be used to measure perceived employee productivity and efficiency, evaluate job candidates, or monitor the alertness of factory workers.

We are also seeing these technologies increasingly used in education, primarily to gauge if students are paying attention and engaging with the lesson or to assist children with cognitive disabilities in identifying the affects of others.

Sustainability Implications

The limitations and potential misuse of affective computing raise critical questions about the integration of this technology. For sustainability and human rights teams, there are reasons to be concerned if the technology is not accurate, since decisions based on inaccurate assessments may lead to real-world harm. But there are also reasons to be concerned if the technology is accurate, specifically if it can be deployed to manipulate individuals’ emotional states, inner consciousness, and perceptions, or even to influence their behavior.

The use of affective technologies in both public and private spaces could significantly impact human dignity and autonomy, alongside other rights such as privacy and freedom of thought, if deployed to manipulate individuals’ emotional states, inner consciousness, and perceptions, or even to influence their behavior.

As companies begin to explore affective technologies, corporate sustainability and human rights teams should take steps to assess the technologies’ actual and potential impacts. In addition, these teams will need to develop policies governing the technologies’ development, integration, deployment, and use.

Affective computing holds the promise of powerfully attuning goods and services, but is also fraught with human rights and ethical risks. If inaccurate, it could result in faulty decisions in high-stakes areas like education and the workplace. If accurate, it might be used to manipulate individuals without their consent. To realize its potential, affective computing will need to be deployed within a foresight-driven and human rights-based ethical framework.

Because these technologies can be used to scan people in public spaces, the issue of consent will also be front and center for these teams, as will the larger issue of what ‘informed consent’ may mean in this context. Storing this information is a further concern, since it could magnify existing privacy risks.

Furthermore, academics and developers have begun to raise the alarm around the ethics and human impacts of affective technology. For one, these technologies are still far from reliable at accurately assessing individuals’ expressed affect. There is significant variation in the way people express emotions, both at the individual level and across cultures, and as a result these technologies often have high error rates.

Error rates are often even higher for certain segments of the population, such as people of color or women. This can lead to discriminatory technologies (for example, a product that is able to identify the emotions of white men, but not black men) in addition to discriminatory practices in how and where the technologies are used.

These considerations, and potential harms, must be addressed by sustainability and human rights teams early, in collaboration with the relevant internal stakeholders working on equity and inclusion and with the individuals responsible for either developing or procuring these types of technology.

Beyond the potential for discrimination, these technologies are often limited in the affects they can detect: most products claim to identify only around six or seven emotions, and many cannot detect more than one at a time. Because of these limitations, affective technologies can cause real-world harm when decisions are made based on inaccurate assessments, particularly in high-stakes areas such as hiring and education. Companies deploying affective technology in these and other high-risk settings must conduct due diligence to identify and address these harms.
